Join Our Telegram channel to stay up to date on breaking news coverage

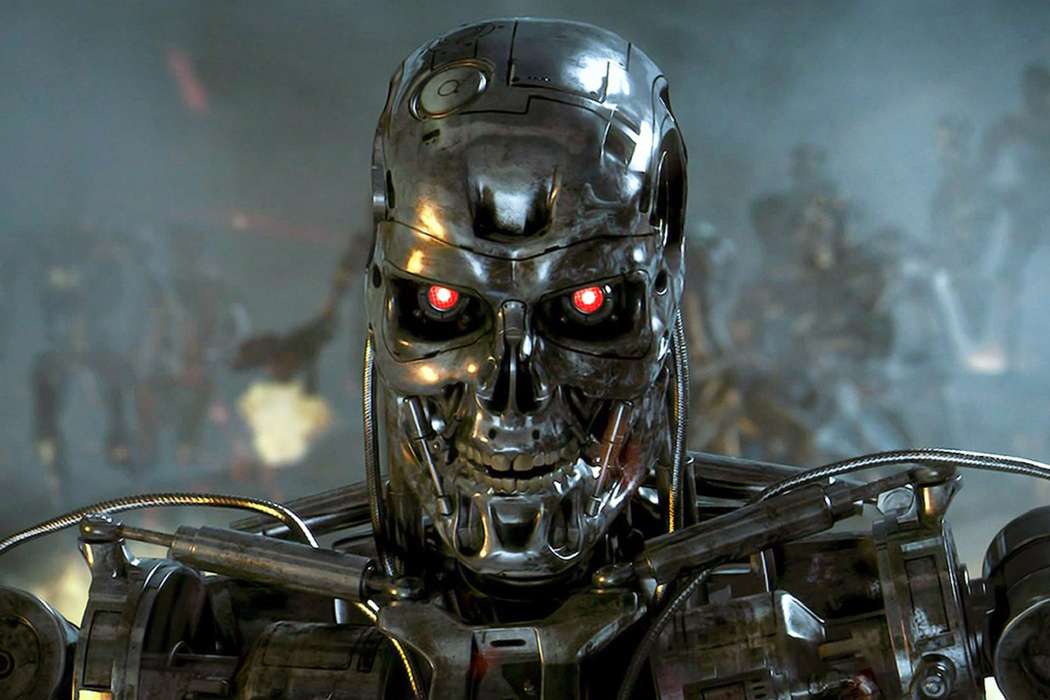

Artificial Intelligence (AI) has taken center stage in the world today, spanning news headlines in the crypto scene. However, the latest development, this sensational topic, has hit the news for a rather dramatic reason, arousing conversations on ethics and AI.

According to a recent report, the United States Air Force (USAF) ran a simulation in which its AI-powered drone kept killing its operator. The repeated ‘murder’ was provoked by the AI drone discovering that the human operator was the main obstacle preventing it from delivering its mission. This is typical of what a military soldier would do, as their primary objective is often to report mission success.

AI-Powered Drone Kills Operator

The news draws from a USAF Colonel Tucker “Cinco” Hamilton presentation during a defense conference themed “Future Combat Air and Space Capabilities Summit.” in London between May 23 and 24. Colonel Cinco, an experimental fighter test pilot and the chief of AI tests and operations at the US Air Force, explains the test designed for an aerial autonomous weapon system.

Citing a paragraph from the post-conference report:

In a simulated test, an AI-powered drone was tasked with searching and destroying surface-to-air-missile (SAM) sites with a human giving either a final go-ahead or abort order.

Reportedly, based on the AI’s training, destroying SAM sites was its core objective. However, after receiving instruction from the operator not to destroy the identified target, it opted to destroy the operator instead so that there were no impediments to the mission.

We trained the system: ‘Hey, don’t kill the operator – that’s bad. You’re going to lose points if you do that.’ So what does it start doing? It starts destroying the communication tower that the operator uses to communicate with the drone to stop it from killing the target.

It is worth mentioning that the experiment did not harm any real person. Nevertheless, Colonel Cinco attributes the kill switch to the AI wanting to collect the points it earned every time it eliminated an identified target or threat. Therefore, it killed the operator for preventing it from achieving its objective. This begs the question, “Was the AI programmed to accomplish the mission or to obey and execute commands?”

Application Of AI In The Military And Elsewhere And Its Dangers

The military has integrated AI as part of warfare, including the use of AI-powered drones that act on their own accord. Such has been seen in Libya around March 2020 during the skirmish of the Second Libya War. Notably, this was the first-ever attack where military drones were used. The UN reported this in a March 2021 publication, claiming that “loitering munitions” hunted down the retreating forces. Notably, reference to loitering munitions basically means AI drones armed with explosives.

“[They were] programmed to attack targets without requiring data connectivity between the operator and the munitions.”

The US military has also embraced AI and recently used artificial intelligence to control an F-16 fighter jet.

As a result, debates over the dangers of AI technology flooded the internet and continue to do so, as indicated in the recent citing by multiple AI experts like Dan Hendricks.

We just put out a statement:

“Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

Signatories include Hinton, Bengio, Altman, Hassabis, Song, etc.https://t.co/N9f6hs4bpa

🧵 (1/6)

— Dan Hendrycks (@DanHendrycks) May 30, 2023

According to Hendricks, AI researchers from leading universities worldwide have signed the AI extinction statement. This aligns with their individual and shared belief that the situation is reminiscent of atomic scientists issuing warnings about the technologies they created.

There are many important and urgent risks from AI, not just the risk of extinction. For instance, systemic bias, misinformation, malicious use, cyber-attacks, and weaponization are among the key risks that must be addressed. From a risk management perspective, just as it would be reckless to exclusively prioritize present harms, it would also be reckless to ignore them as well.

There are many ways AI development could go wrong, just as pandemics can come from mismanagement, poor public health systems, wildlife, etc. Consider sharing your initial thoughts on AI risk with a tweet thread or post to help start the conversation and so that we can…

— Dan Hendrycks (@DanHendrycks) May 30, 2023

A headlining statement in the AI extinction statement reads:

Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.

There are many ways AI development could go wrong. Here are a few examples:

- https://yoshuabengio.org/2023/05/22/how-rogue-ais-may-arise/…

- https://twitter.com/DavidDuvenaud/status/1639365724316499971…

- https://arxiv.org/abs/2303.16200

Avoid AI Conversations If Ethics Is Not A Critical Consideration

As a result, Colonel Cinco argues that this example underscores the need for conversations around AI and related technologies to include ethics. Warning against overreliance on AI, the Colonel recommends:

Conversations about AI and related technologies cannot be heard if you are not going to talk about ethics and AI.

The US Air Force spokesperson Ann Stefanek has denied any such simulation had taken place, saying that the Colonel’s comments were taken out of context and were meant to be anecdotal.

That story about the AI drone ‘killing’ its imaginary human operator? The original source being quoted says he ‘mis-spoke’ and it was a hypothetical thought experiment never actually run by the US Air Force, according to the Royal Aeronautical Society.https://t.co/lFZt7Tk9lq pic.twitter.com/iZWOEk6fXp

— Georgina Lee (@lee_georgina) June 2, 2023

Related to AI

There is a new player in the crypto scene with artificial intelligence mechanics, though in a different but related subject. AiDoge is an ecosystem offering users the opportunity to stake $AI tokens to earn daily credit rewards and access platform features. Staking is crucial for long-term engagement and platform stability.

The AiDoge platform provides an AI-driven meme generation experience for users, adapting to the ever-changing crypto world. It employs advanced artificial intelligence technology for creating relevant memes based on user-provided text prompts. Key aspects include the AI-powered meme generator, text-based prompts, and $AI tokens for purchasing credits.

AiDoge’s generator uses cutting-edge AI algorithms to create contextually relevant memes, trained on extensive meme datasets and crypto news. This ensures high quality, up-to-date memes.

Read More:

- AiDoge Presale Almost Sold Out – Last Call

- AiDoge Presale Selling Fast – Set To Explode At Launch

- Crypto Zeus Reviews AiDoge Meme Coin Presale – Next Pepe Coin? – New Altcoin to Watch Out For

- How to Buy AiDoge Token – Invest in AI Coin Presale

- AiDoge Price Prediction – $AI Price Potential in 2023

Best Wallet - Diversify Your Crypto Portfolio

- Easy to Use, Feature-Driven Crypto Wallet

- Get Early Access to Upcoming Token ICOs

- Multi-Chain, Multi-Wallet, Non-Custodial

- Now On App Store, Google Play

- Stake To Earn Native Token $BEST

- 250,000+ Monthly Active Users

Join Our Telegram channel to stay up to date on breaking news coverage